Mech Interp Workshop: Opening the black box of a neural net

Ever wonder how neural nets actually perform their computations? Want to know more about what this “mechanistic interpretability” thing is and how it relates to AI safety, but don’t have experience building LLMs from scratch? This is the workshop for you!

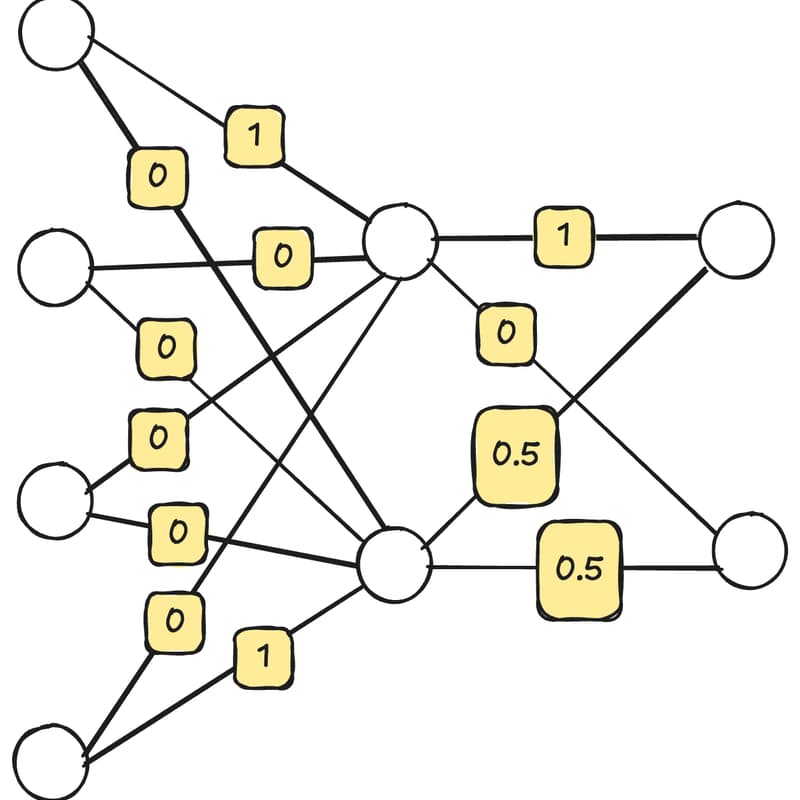

We’ll be analyzing how simple neural nets perform image recognition. This workshop is meant for people who have built and trained their own simple neural nets before, but it does not require any LLM knowledge. We will also assume Python knowledge and some basic familiarity with PyTorch.

This workshop will consist of an introductory talk on the mechanistic interpretability of simple neural nets, some guiding principles, and then a hands-on session where we actually do some interpretability exercises.

Please bring a computer for the coding exercises!