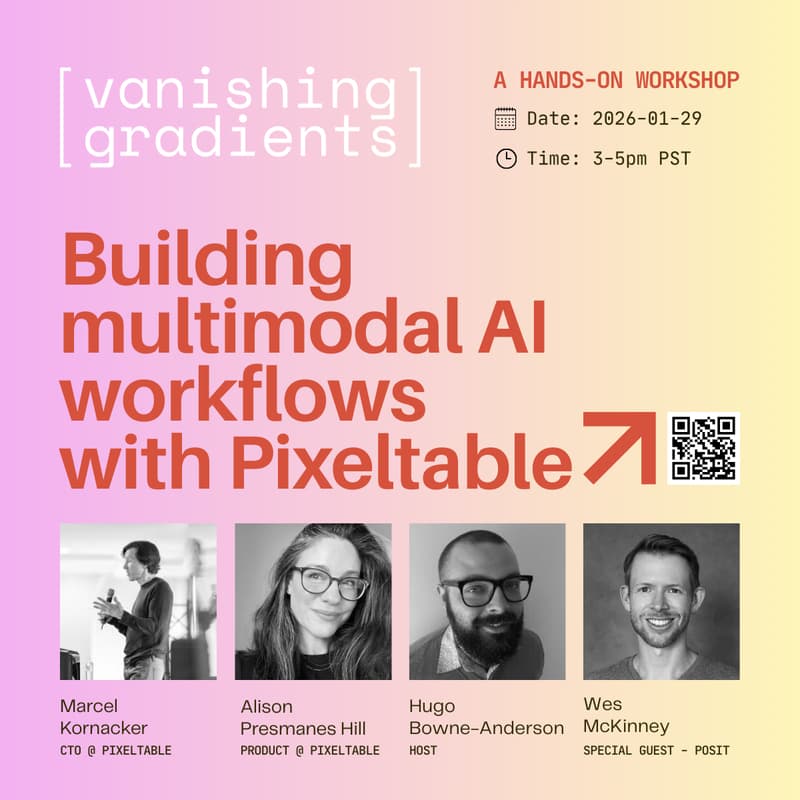

Building Multimodal AI Workflows with Pixeltable

The challenge with multimodal AI isn't calling models. It's everything else. Videos need to become frames. Audio needs transcription. Embeddings need to stay in sync when new data gets added. Generated outputs need to feed back into processing workflows.

In this free live workshop with Marcel Kornacker (Pixeltable CTO, co-creator of Apache Parquet and Impala), Alison Hill (Pixeltable, former product lead for R Markdown, Quarto, and conda), and Hugo Bowne-Anderson (Vanishing Gradients), you'll see how to skip the data plumbing and focus on building with Pixeltable, an open source Python library designed for multimodal workflows.

Special guest Wes McKinney (Posit, creator of pandas) will also join at the end of the workshop for a fireside chat about why multimodal data needs a fundamentally different approach to support AI workflows.

Most modern approaches separate storage (databases and object stores) from orchestration (workflow tools), which means writing a series of custom scripts to wire them together. That's where 80-90% of development effort goes today. Pixeltable combines storage and orchestration: it's a database that knows how to run transformations and where to store (and retrieve) the results. Think of it as the system you'd build for yourself if you had time to create high-throughput, low-latency data infrastructure from scratch, with native support for all multimodal data types. Instead of spending weeks wiring together S3, vector databases, model APIs, and retry logic, you get a complete system where storage and orchestration just work together.

In this hands-on session, you'll:

Use generative models to work with multimodal data like videos, images, and audio as inputs

Compose and remix new media from model outputs using functions like video segmentation, captioning, and text/audio overlays

Build workflows optimized for minimal latency and maximal throughput

Handle transformations across media types without juggling files, frameworks, or for loops

Share your multimodal data (inputs, schema, and generated outputs) in Pixeltable Cloud

Workshop Flow

We'll build a complete workflow step by step:

Phase 0: Setup - get Pixeltable running locally with sample videos

Phase 1: Tables for Multimodal Data - store videos, extract frames and audio, build your base table

Phase 2: Build and Iterate - create views, add computed columns, use generative models, watch everything update automatically

Phase 3: Share and Collaborate - replicate your complete workflow to Pixeltable Cloud
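
To give a feel for what "watch everything update automatically" means in Phase 2, here is a minimal pure-Python sketch of the idea behind computed columns. It is a toy illustration only, not Pixeltable's API: `ToyTable`, its methods, and the sample column names are hypothetical, and the lambdas stand in for real model calls.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


class ToyTable:
    """Toy in-memory table (hypothetical; not Pixeltable's API)."""

    def __init__(self) -> None:
        self.rows: List[Row] = []
        # Computed columns, kept in the order they were declared.
        self.computed: Dict[str, Callable[[Row], Any]] = {}

    def add_computed_column(self, name: str, fn: Callable[[Row], Any]) -> None:
        """Register a derived column and backfill it for existing rows."""
        self.computed[name] = fn
        for row in self.rows:
            row[name] = fn(row)

    def insert(self, **values: Any) -> None:
        """Store a new row and run every registered transformation on it."""
        row: Row = dict(values)
        for name, fn in self.computed.items():
            row[name] = fn(row)
        self.rows.append(row)


videos = ToyTable()
videos.insert(title="intro.mp4", duration_s=90)

# Stand-ins for model calls: each column is derived from earlier columns.
videos.add_computed_column("minutes", lambda r: r["duration_s"] / 60)
videos.add_computed_column(
    "label", lambda r: f"{r['title']} ({r['minutes']:.1f} min)"
)

# A row inserted later gets every derived column without extra glue code.
videos.insert(title="demo.mp4", duration_s=240)

for row in videos.rows:
    print(row["label"])
```

The existing row is backfilled when a column is added, and the later row is processed on insert, so stored data and derived results stay in sync without a separate orchestration script.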

What You'll Learn

How to build complex workflows for multimodal data (not just basic input→model→output)

Why parallelizing generative model calls matters for production workflows

How to extract, query, and reuse intermediate outputs (timestamps, segments, transcripts)

Why unifying storage and orchestration beats stitching together separate systems with scripts

How to share reproducible multimodal datasets with all inputs, outputs, and metadata intact

Requirements

Python 3.9+ and pip (we'll walk through installation)

Sample videos provided (or bring your own)

API keys for generative models (we'll provide temporary keys for the workshop)

No prior database or ML ops experience required

Bring your laptop. This is fully hands-on.

If you can't make it, register anyway and we'll share the recording afterward.