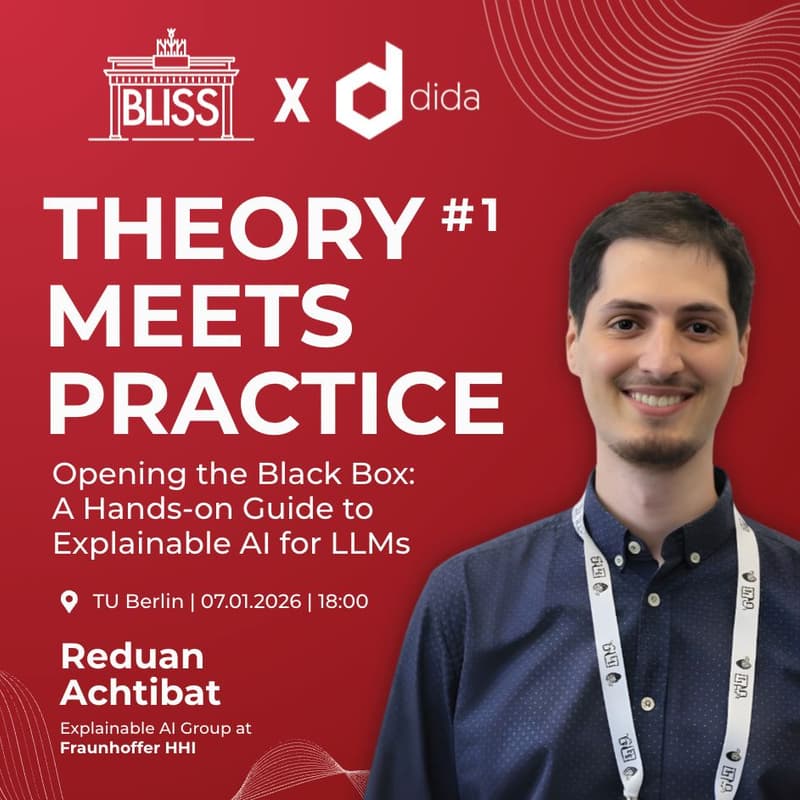

Theory Meets Practice #1: Opening the Black Box with LRP: A Hands-On Guide to Explainable AI for LLMs

We are excited to host the first workshop by dida for BLISS in the "dida x BLISS: Theory meets practice" series. The workshop will feature Reduan Achtibat from the Explainable AI Group at Fraunhofer HHI, who will guide us through an interactive session on the emerging field of explainability for LLMs.

Title: Opening the Black Box with LRP: A Hands-On Guide to Explainable AI for LLMs

📅 Date: 7th January

🕕 Time: 18:00

📍 Location: TU Berlin, Marchstrasse 23 (room 0.011)

The session will last around 2 hours, followed by a networking session with dida and fellow AI enthusiasts (and free pizza!🍕). Bring your laptop!

Abstract: While Large Language Models have demonstrated unprecedented capabilities in reasoning and retrieval, their internal decision-making processes remain largely opaque. As they grow in complexity, treating these models as 'black boxes' is no longer sufficient for building reliable, safe, and transparent systems.

Hosted by the Explainable AI Group at Fraunhofer HHI, this workshop offers a practical, hands-on deep dive into the inner workings of Transformer models. We will demonstrate how to apply advanced attribution and analysis methods to demystify how LLMs process information, store knowledge, and generate predictions.

In this session, we will cover:

Faithful Attribution: Going beyond input-level explanations to trace the model's reasoning from input tokens through latent concepts to the final prediction with Layer-wise Relevance Propagation.

Anatomy of In-Context Learning: Identifying how specific attention heads retrieve information from context versus storing factual knowledge.

Control and Source Tracking: Intervening in latent representations to steer model generation and trace information sources.
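To give a flavour of the first topic ahead of the session, here is a minimal sketch of the idea behind Layer-wise Relevance Propagation. It is not the workshop material: it assumes a toy two-layer ReLU network with random weights and zero biases, and it uses the generic epsilon rule on NumPy arrays. The helper name `lrp_epsilon_linear` and all variable names are made up for illustration, and the Transformer-specific rules needed for real LLMs are not shown.

```python
import numpy as np


def lrp_epsilon_linear(a, w, b, relevance_out, eps=1e-9):
    """Redistribute relevance from a linear layer's outputs to its inputs.

    Hypothetical helper implementing the LRP-epsilon rule for z = a @ w + b.
    """
    z = a @ w + b                                   # pre-activations, shape (out,)
    z_stab = z + eps * np.where(z >= 0, 1.0, -1.0)  # stabiliser avoids division by zero
    s = relevance_out / z_stab                      # relevance per unit of pre-activation
    return a * (w @ s)                              # relevance per input, shape (in,)


# Toy network (assumption): 4 inputs -> 5 hidden ReLU units -> 3 output logits.
rng = np.random.default_rng(0)
x = rng.normal(size=4)
w1, b1 = rng.normal(size=(4, 5)), np.zeros(5)
w2, b2 = rng.normal(size=(5, 3)), np.zeros(3)

# Forward pass, keeping the activations needed for the backward relevance pass.
h = np.maximum(0.0, x @ w1 + b1)
logits = h @ w2 + b2

# Start the explanation at the predicted class: its logit is the total relevance.
target = int(np.argmax(logits))
r_out = np.zeros_like(logits)
r_out[target] = logits[target]

# Propagate relevance backwards, layer by layer, down to the input features.
r_hidden = lrp_epsilon_linear(h, w2, b2, r_out)
r_input = lrp_epsilon_linear(x, w1, b1, r_hidden)

print("relevance per input feature:", r_input)
print("sum of input relevance:", r_input.sum(), "target logit:", logits[target])
```

With zero biases and a small `eps`, the input relevances should sum to approximately the explained logit. This conservation property is what lets relevance be read as a share of the prediction at every layer, including the intermediate `r_hidden`.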

Logistics: Please bring your laptop to code along! We’ll provide the environment via Google Colab.

Join us to bridge the gap between model performance and model understanding.

Who is this event for?

This workshop is open to everyone curious about explainability, including students, PhD candidates, and professionals! A basic understanding of Python and LLMs is all you need to participate.

We are BLISS e.V., Berlin’s AI community connecting like-minded individuals passionate about machine learning and data science. Our BLISS Workshops connect students and young professionals with industry partners, offering an inside look into how machine learning is applied in real-world settings, from research and development to deployment.

We are dida, scientific engineers who believe that reliable AI should not be a "black box". That is why we prioritize transparency, mathematics, and code over hype. By bridging the gap between theoretical research and production, we develop custom white-box AI solutions that are fully explainable, rigorously engineered, and free of "magic".

dida Website: https://dida.do

dida YouTube: https://www.youtube.com/@dida-do

BLISS Website: https://bliss.berlin

BLISS YouTube: https://www.youtube.com/@bliss.ev.berlin