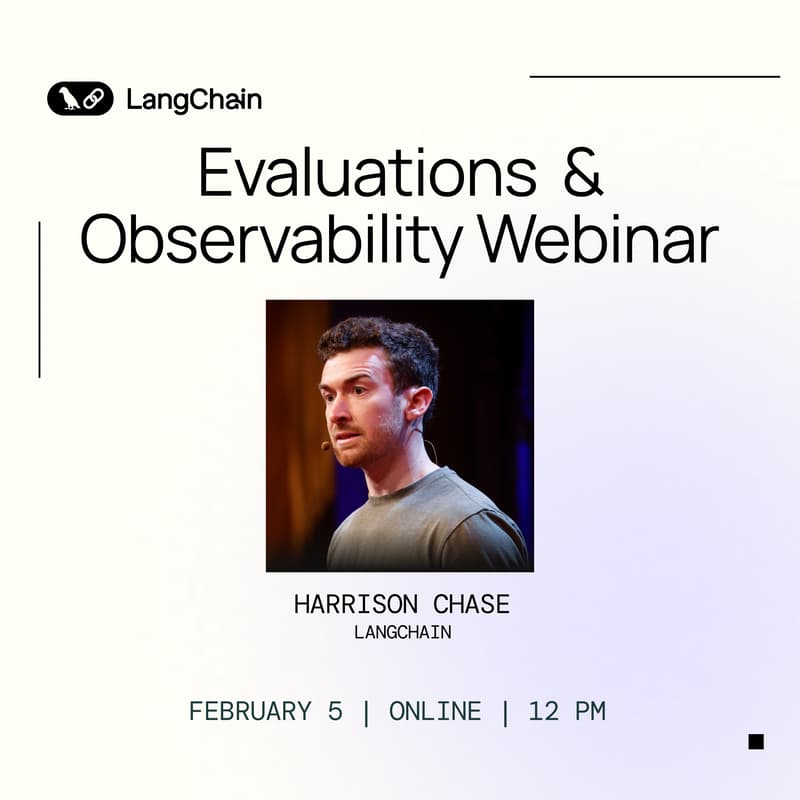

Webinar: Evaluations & Observability for AI Agents

Join Harrison Chase, Co-founder and CEO of LangChain, for a technical deep dive into how teams are debugging, evaluating, and operating AI agents in production.

As agents grow more complex and long-running, failures are no longer about broken code; they’re about broken reasoning. This session will focus on how tracing and evaluation work together to help teams understand agent behavior, diagnose where reasoning goes wrong, and systematically improve agent reliability.

We’ll cover practical approaches to observability and evaluation for multi-step agents, including how to reason about trajectories, tool usage, state, and efficiency, drawing on real-world examples from teams running agents in production.

This webinar is designed for engineering practitioners and technical leaders who are actively building or scaling agent-based systems and want concrete guidance on what works in practice.

Agenda:

12:00pm: Evaluations & Observability for Agents

12:30pm: Q&A session

About LangSmith:

We provide the agent engineering platform and open source frameworks to help companies ship reliable agents. LangSmith powers top engineering teams like Cisco, Klarna, Clay, BlackRock, LinkedIn, and more. Observe, evaluate, and deploy agents with LangSmith, our comprehensive platform for agent engineering. Learn more: https://www.langchain.com/