Introducing UX Evals

AI-powered products don’t behave like traditional software — but most teams are still evaluating them as if they do.

Benchmarks and classic usability methods weren’t designed for conversational, adaptive, multi-turn experiences. Teams that rely heavily on them still can’t tell whether their AI products are actually helping users.

This live workshop introduces UX Evals — a new research method for evaluating consumer-facing AI through real user experience.

Read more about the new methodology here.

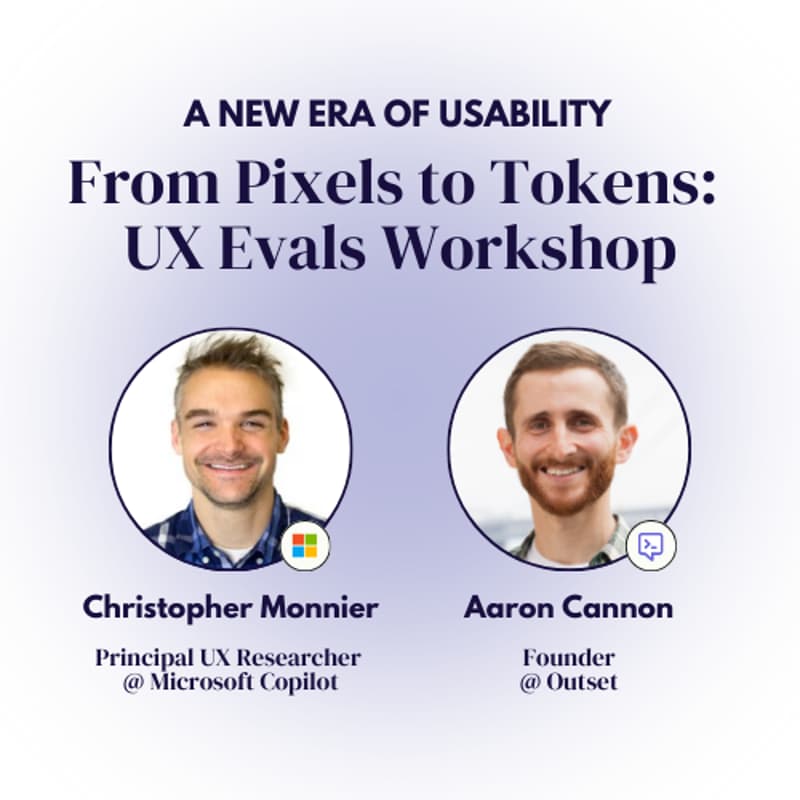

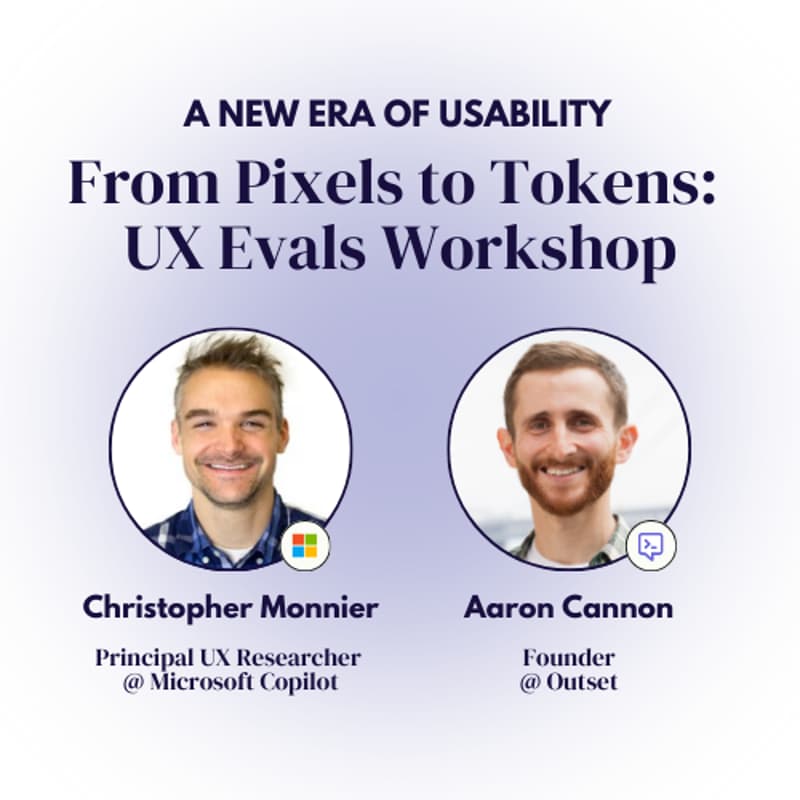

Who you’ll hear from:

- Chris Monnier, Principal UX Researcher @ Microsoft Copilot
- Aaron Cannon, Founder + CEO of Outset

What you’ll learn:

- Why traditional UX research and AI evals fall short for AI-native products
- What teams are getting wrong when they equate model quality with experience quality
- How UX Evals capture real, first-person, multi-turn interactions
- Exactly how to design, run, and operationalize UX Evals on your team

If AI behavior shapes your product experience, you can’t afford to evaluate it with outdated methods.

Join the workshop and learn how to implement UX Evals at your org.