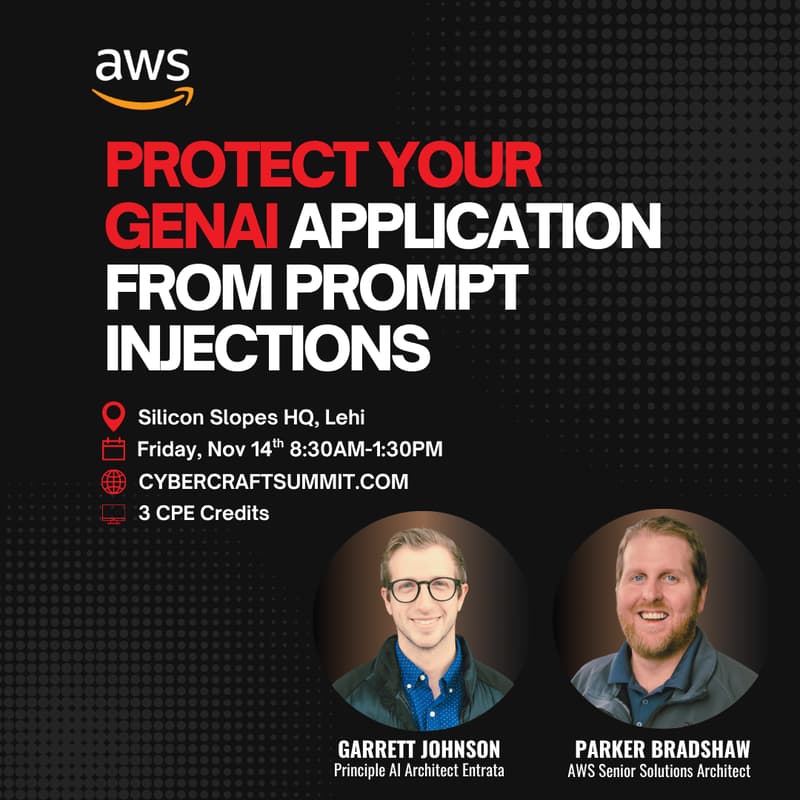

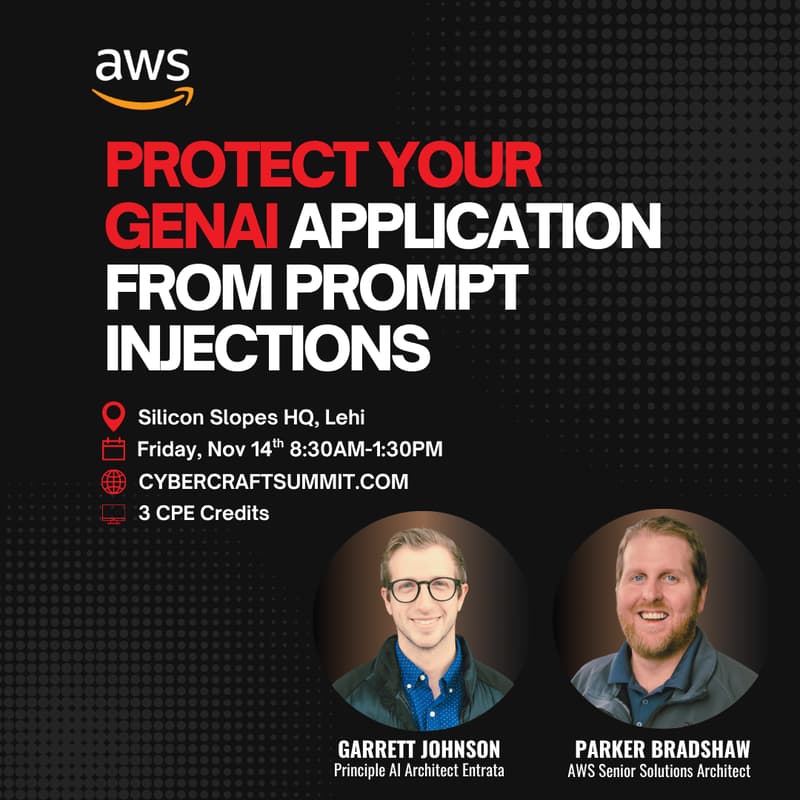

CyberCraft Summit AWS Workshop: Protect Your GenAI Application From Prompt Injections

AWS Workshop at CyberCraft Summit 2025

Protect Your GenAI Application from Prompt Injections

Presented by: Parker Bradshaw, Enterprise Solutions Architect, AWS

🗓 November 14, 2025 | 📍 CyberCraft Summit, Silicon Slopes HQ | 💻 Bring your laptop

About the Speakers

Parker Bradshaw is an Enterprise Solutions Architect at AWS with over five years of experience designing cloud solutions for leading retail and e-commerce brands. He specializes in generative AI and data management, helping organizations deploy scalable, secure, and high-performing AI applications. At the CyberCraft Summit, Parker will share practical strategies for safeguarding GenAI applications from prompt injection attacks, drawing from his extensive experience in building and securing AI-driven architectures on AWS.

Garrett Johnson is the Principal AI Systems Architect at Entrata, where he leads AI strategy. Garrett is 9x AWS certified and is the author of half a dozen architecture best practices published by Amazon.

Workshop Overview

Generative AI introduces new opportunities, but also new risks. In this hands-on AWS workshop, you’ll learn how to identify, exploit, and defend against prompt injection vulnerabilities in GenAI systems using Amazon Bedrock.

Through an interactive “capture the flag” challenge, participants will:

Execute real-world prompt injection and jailbreak techniques.

Explore how LLMs can be manipulated through direct injection, social engineering, and SQL-style exploits.

Implement Amazon Bedrock Guardrails to prevent and detect these attacks.

Use Amazon CloudWatch to visualize and monitor security events in real time.

This workshop blends offensive and defensive prompt engineering—helping you understand both the attack surface and the protective mechanisms needed to secure LLMs in production.

Learning Objectives

Participants will leave this session with the ability to:

Recognize key vectors for prompt injection in GenAI systems.

Apply five distinct attack techniques in a simulated AI banking environment.

Configure custom Guardrails policies and content filters in Amazon Bedrock.

Establish comprehensive logging and monitoring using CloudWatch.

Who Should Attend

This workshop is ideal for professionals building or securing GenAI applications:

Cybersecurity Professionals

AI/ML Engineers

Cloud Architects

DevSecOps Teams

Security Analysts

Solution Architects

Technical Leaders who wants to get some hands on experience

Prerequisites

Basic familiarity with AWS services such as Amazon Bedrock, Amazon EC2, Amazon CloudWatch, and AWS CloudShell will help but is not required.

No prior experience with prompt injection attacks is required. All security concepts will be introduced and practiced during the session.