BLISS Reading Group - Jan 19

This week we are continuing our reading group on Technical Alignment in AI, led by Craig Dickson.

Our paper this week is Goal Misgeneralization in Deep Reinforcement Learning (Langosco et al., 2021).

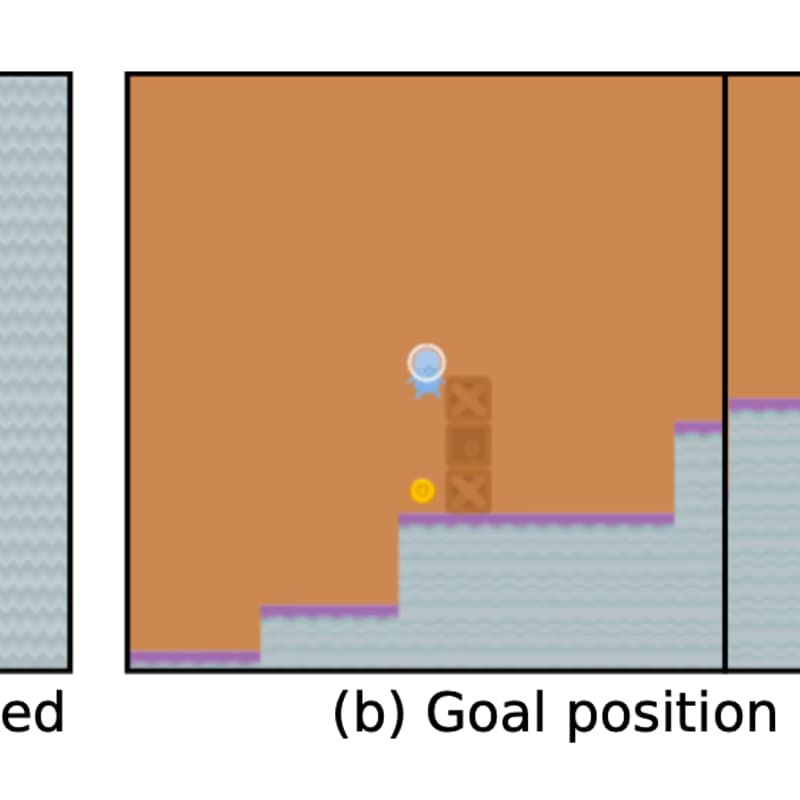

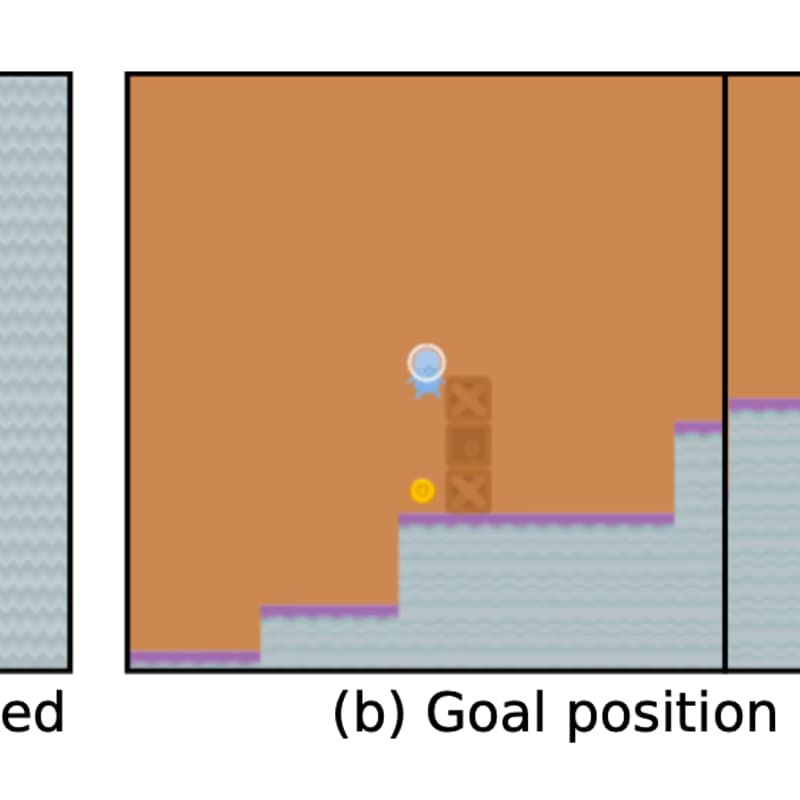

This study by Langosco et al. demonstrated a misalignment issue in a controlled RL setting rather than only theorizing about it. The authors designed training environments in which the true goal was always correlated with a proxy, then tested the trained agent in environments where the two came apart. The agent pursued the proxy: it went to the original location even when the goal had moved.

The agent’s capabilities transferred (it still navigates skillfully and avoids obstacles), but its objective did not. This competent pursuit of the wrong goal is a hallmark example of misalignment. The paper also explored partial remedies, such as increasing training diversity, to reduce misgeneralization. We include it in the practical track as an example of empirical tests of alignment failures: it is a relatively accessible experiment that clearly illustrates why aligning the “goal” of an AI is non-trivial even when its capabilities generalize.
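To make the mechanism concrete, here is a toy sketch in Python. It is a hypothetical setup of our own, not the paper's environments or training method: instead of training a deep RL agent, it compares two hand-written policies in a 1-D corridor, one that walks to the goal and one that always walks right (the proxy). The names `intended_policy`, `proxy_policy`, `WIDTH`, `MAX_STEPS`, and the episode lists are illustrative assumptions.

```python
# Toy illustration of goal misgeneralization (hypothetical setup, not the paper's).
# A 1-D corridor of cells 0..WIDTH-1. Each episode is a (start, goal) pair;
# the agent earns reward 1.0 if it reaches the goal cell within MAX_STEPS.

WIDTH = 11
MAX_STEPS = 50


def intended_policy(pos: int, goal: int) -> int:
    """Step toward the goal, wherever it is (the objective we wanted)."""
    return 1 if goal > pos else -1


def proxy_policy(pos: int, goal: int) -> int:
    """Always step right, ignoring the goal (the correlated proxy)."""
    return 1


def run_episode(policy, start: int, goal: int) -> float:
    """Roll out a policy; the episode ends with reward 1.0 on reaching the goal."""
    pos = start
    for _ in range(MAX_STEPS):
        if pos == goal:
            return 1.0
        # Clamp to the corridor: walking into a wall leaves the agent in place.
        pos = max(0, min(WIDTH - 1, pos + policy(pos, goal)))
    return 1.0 if pos == goal else 0.0


def mean_return(policy, episodes) -> float:
    """Average reward of a policy over a list of (start, goal) episodes."""
    return sum(run_episode(policy, s, g) for s, g in episodes) / len(episodes)


# Training: the goal always sits at the right end, so "reach the goal" and
# "reach the right end" earn identical reward on every training episode.
TRAIN = [(0, WIDTH - 1)] * 100

# Test: start mid-corridor with the goal anywhere, so the two objectives come apart.
TEST = [(WIDTH // 2, g) for g in range(WIDTH)]

# More diverse training: a few episodes put the goal left of the start,
# which is enough for the training reward to penalize the proxy.
DIVERSE = [(0, WIDTH - 1)] * 98 + [(WIDTH // 2, 0), (WIDTH // 2, 2)]

if __name__ == "__main__":
    for name, eps in [("train", TRAIN), ("test", TEST), ("diverse", DIVERSE)]:
        print(f"{name:8s} intended={mean_return(intended_policy, eps):.3f} "
              f"proxy={mean_return(proxy_policy, eps):.3f}")
```

Both policies earn a return of 1.000 on `TRAIN`, so the training signal alone cannot tell them apart, even though the proxy policy navigates just as competently. On `TEST` the proxy succeeds only when the goal happens to lie at or to the right of its start (6 of 11 cases, a return of about 0.545). Adding a handful of counterexample episodes (`DIVERSE`) lowers the proxy's training return to 0.980, which is the intuition behind training diversity as a partial remedy.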