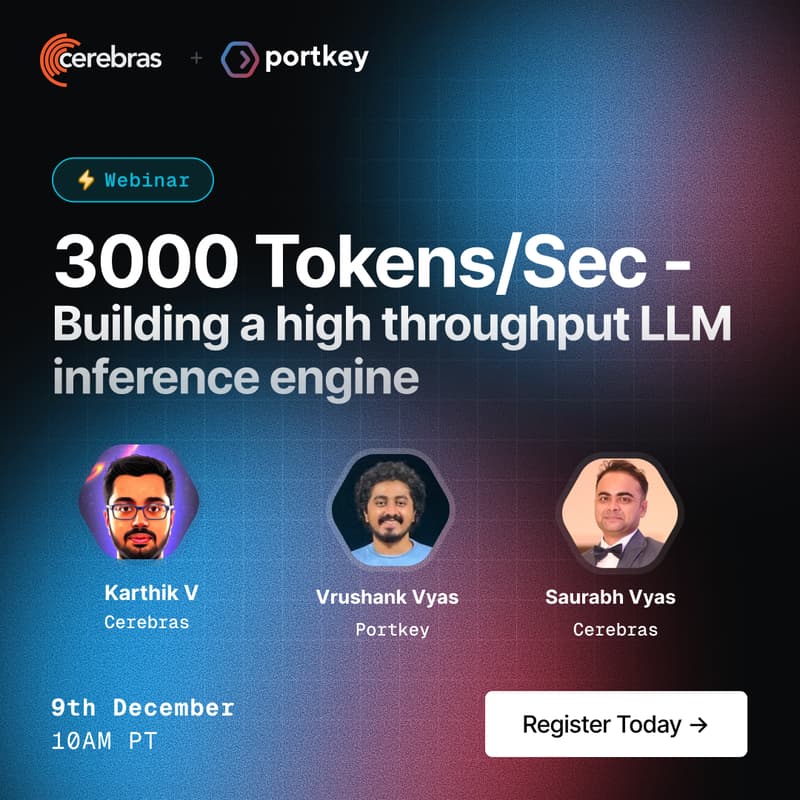

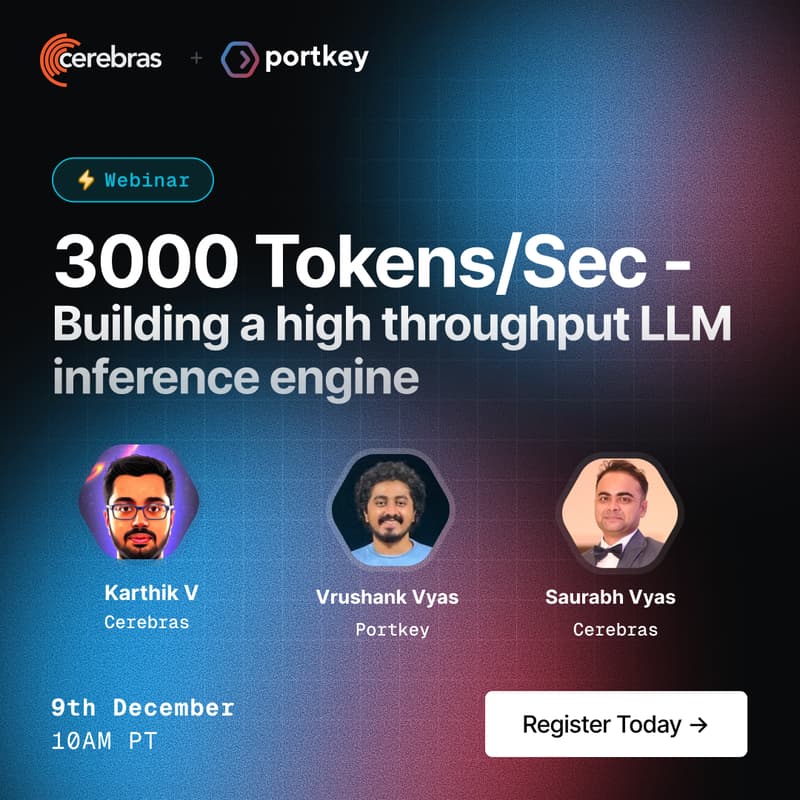

3000 Tokens/Sec: Building a High-Throughput LLM Inference Engine

Cerebras has reimagined how LLMs run at scale, delivering throughput and latency numbers that traditional GPU setups can’t match.

In this session, we’ll break down how Cerebras achieves high-speed inference, and how teams use Portkey’s AI Gateway to bring that performance into real applications without changing their stack.

What we’ll cover:

Why LLM inference is slow today: the core GPU bottlenecks and what limits token throughput.

How high-throughput inference works on Cerebras: wafer-scale hardware, on-chip memory, and pipeline execution that enable sub-millisecond per-token generation (3000 tokens/sec works out to roughly 0.33 ms per token).

How Portkey and Cerebras work together: unified access, observability, routing, and production-ready workflows for developers.

Live Q&A with the speakers.

Who should attend:

Teams building high-performance LLM apps, agents, or research systems, especially those looking to reduce latency, scale concurrency, or move beyond GPU-based inference.