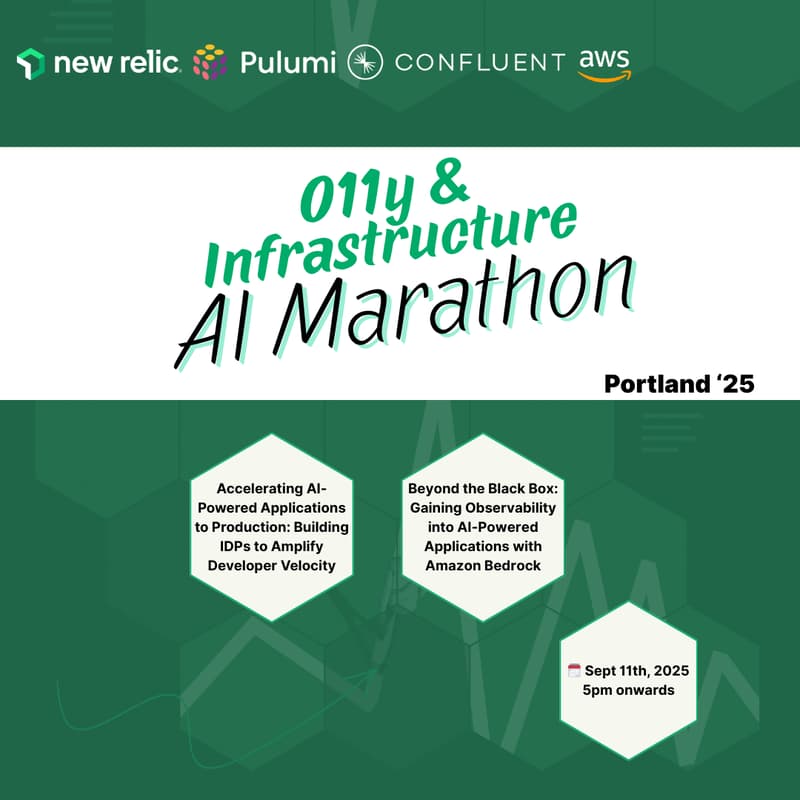

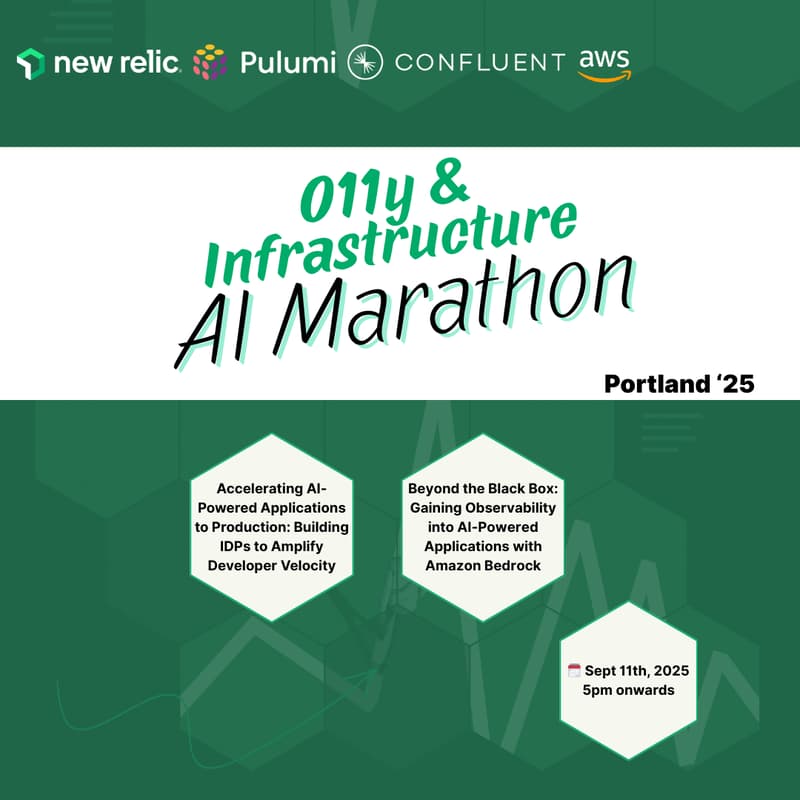

O11y & Infrastructure AI Marathon - Portland '25

Session 1: Accelerating AI-Powered Applications to Production: Building IDPs to Amplify Developer Velocity

AI-empowered developers are shipping applications faster than ever, but infrastructure is still the bottleneck. While developers can now generate entire application features in minutes using AI tools, provisioning the cloud infrastructure to run those applications often takes days or weeks. How can platform teams build Internal Developer Platforms (IDPs) that match the velocity of AI-powered development?

In this talk, I'll share how to design IDPs specifically optimized for AI-empowered application developers who need to move from idea to production at unprecedented speed. We'll explore how the five essential IDP components (abstractions, blueprints, workflows, security guardrails, and self-service) must all evolve to support the new reality of AI-accelerated development cycles.

Speaker: Eron Wright, Software Engineer at Pulumi

Session 2: Beyond the Black Box: Gaining Observability into AI-Powered Applications with Amazon Bedrock

Amazon Bedrock and the Amazon Nova models enable you to build AI-powered applications. Once those applications are running in production, they can encounter errors, and even when nothing fails it is important to have good visibility into how the model is performing. In this session, we will look at why monitoring is key for AI applications and how, when you use Bedrock in your applications, AI monitoring gives you insight not only into errors but also into AI model performance.

Speaker: Jones Zachariah Noel N, Sr Developer Relations Engineer at New Relic

Session 3: The art of structuring real-time data streams into actionable insights

Real-time data is fast, messy, and often unpredictable - making it hard to use effectively. To unlock its full potential, we need to turn raw streams into clean, structured data that's ready for analysis and AI. In this talk, we’ll show how Apache Kafka, Apache Flink, and Apache Iceberg work together to tame real-time data and prepare it for advanced use cases.

We’ll start by showing how Kafka handles high-throughput data ingestion and how Flink processes and transforms these streams in real time. You’ll learn how to use Flink to clean and structure data on the fly, making it ready for storage and querying. Then, we’ll explore how Apache Iceberg stores this data in an efficient, queryable format - paving the way for analytics, dashboards, and ML pipelines.

We’ll also introduce the Model Context Protocol (MCP) and demonstrate how it enables a large language model (LLM) to “talk” to your data - making it possible to ask questions in natural language through a chatbot interface. While this technology is still evolving, it's a major step toward reducing friction in accessing critical business insights.

Using three live demos - based on IoT sensors, social media streams, and product orders - we’ll walk through how to build a real-time data pipeline from raw input to structured output. By the end of the session, you’ll have a clear blueprint for managing real-time data effectively and preparing it for AI and analytics use cases. Whether you’re just getting started or already building modern data pipelines, this talk will give you practical tools and ideas you can use right away.

Speaker: Travis Hoffman, Senior Executive Advisor at Confluent