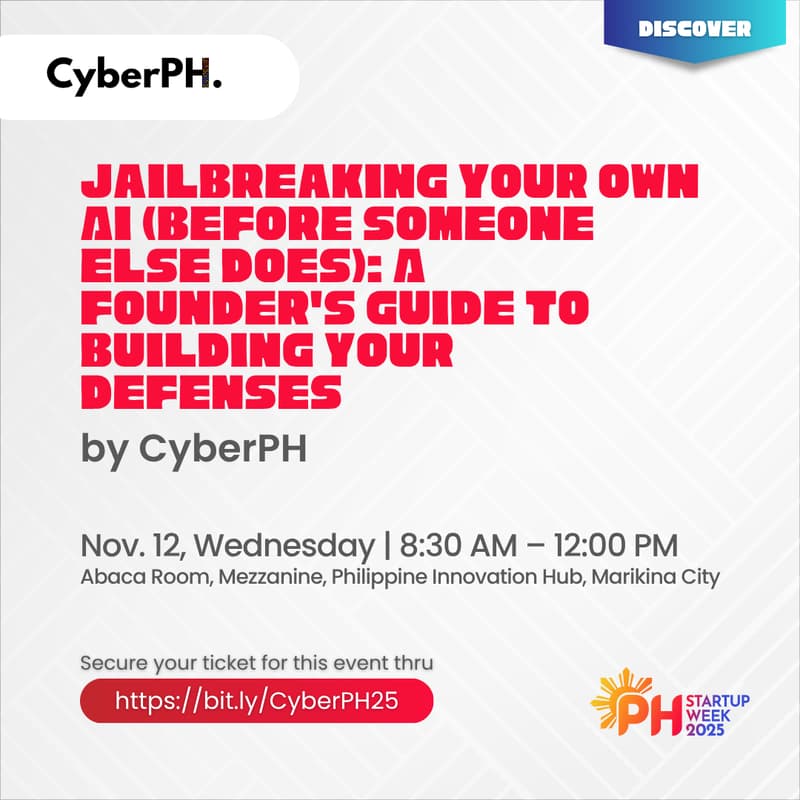

Jailbreaking Your Own AI (Before Someone Else Does): A Founder's Guide to Building Your Defenses

Your AI can be hijacked with nothing but words. A scammer could trick your chatbot into selling a $5,000 flight for $1. A competitor could convince it to leak your customer list. Attacks like these are called AI jailbreaks, and they are among the most direct threats to an AI-powered business. To defend against them, you must first learn how to carry them out.

This is a hands-on, non-technical workshop where you will learn to think like an attacker. You will execute your own AI jailbreaks in a safe environment to understand how your product can be manipulated. Once you know the offense, we’ll teach you the defense. You will learn the fundamental strategies and design principles to build a more secure, trustworthy, and resilient AI.

This session is for founders, not coders. Bring your laptop. Learn to break your AI before it breaks your business.

What to expect

Understand the Threat: Learn what AI jailbreaks are and how severely they can affect a business through scams, data leaks, and manipulation.

Develop an Attacker Mindset: Learn to think like a malicious actor so you can proactively identify vulnerabilities in an AI system.

Gain Hands-On Experience: Safely execute real AI jailbreaks in a controlled environment, moving from theory to practice.

Learn Defensive Fundamentals: Translate what the attacks teach you into actionable strategies and design principles for building more secure and resilient AI.

Build a Secure Foundation: Leave with the knowledge to create a more trustworthy AI product and protect your business from this direct threat.