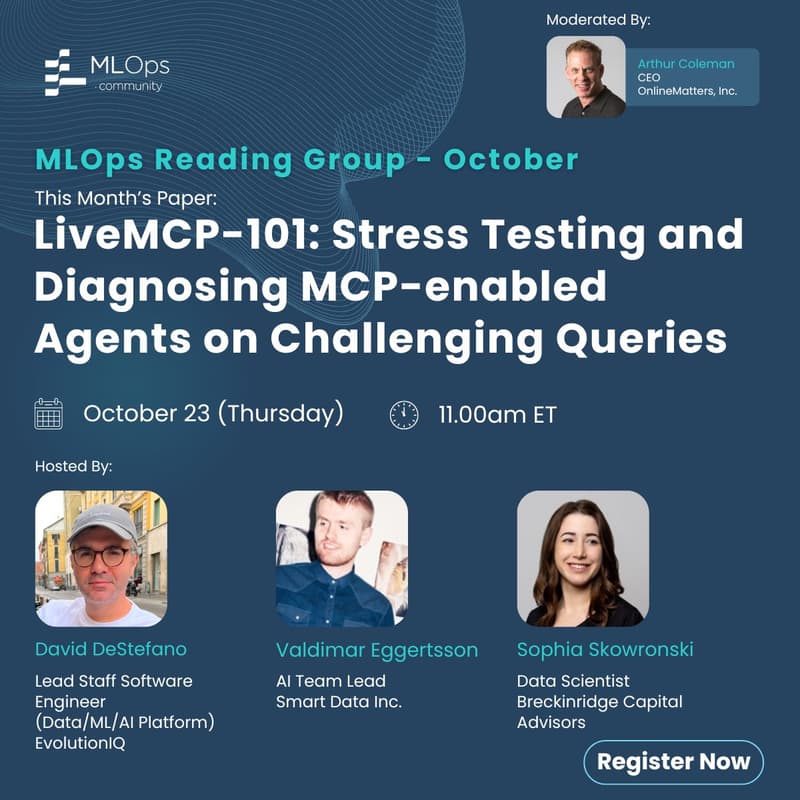

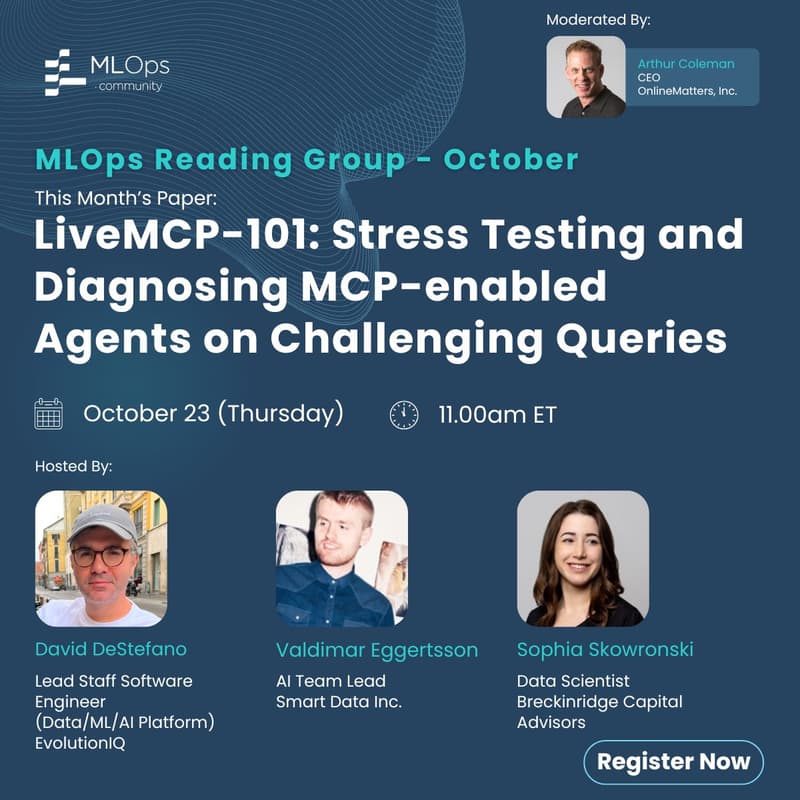

MLOps Reading Group Oct – LiveMCP-101: Stress Testing and Diagnosing MCP-enabled Agents on Challenging Queries

As AI agents become more capable, their real-world performance increasingly depends on how well they can coordinate tools.

This month's paper:

LiveMCP-101: Stress Testing and Diagnosing MCP-enabled Agents on Challenging Queries

introduces a benchmark designed to rigorously test how AI agents handle multi-step tasks using the Model Context Protocol (MCP), the emerging standard for tool integration.

The authors present 101 carefully curated real-world queries, refined through iterative LLM rewriting and human review, that challenge models to coordinate multiple tools such as web search, file operations, mathematical reasoning, and data analysis.

What we’ll cover:

How LiveMCP-101 benchmarks real-world tool use and multi-step reasoning

Insights from the paper’s experiments and error analysis

Key failure modes in current agent architectures

Practical lessons for building more reliable, MCP-enabled systems

📅 Date: October 23rd

🕚 Time: 11 am ET

Speakers:

David DeStefano: Lead Staff Software Engineer (Data/ML/AI Platform), EvolutionIQ

Sophia Skowronski: Data Scientist, Breckinridge Capital Advisors

Valdimar Ágúst Eggertsson: AI Development Team Lead, Snjallgögn (Smart Data Inc.)

Moderator:

Arthur Coleman: CEO, OnlineMatters Inc.

Join the #reading-group channel in the MLOps Community Slack to connect before and after the session.